2025


-

In October 2019, we selected 15 sites from the 2019 EVHOE survey for environmental DNA (eDNA) sampling. The French international EVHOE bottom trawl survey is carried out annually in autumn in the Bay of Biscay (BoB) to monitor demersal fish resources. At each site, we sampled seawater using Niskin bottles mounted on a circular rosette. The rosette held nine bottles, each with a capacity of ∼5 l. At each site, we first cleaned the rosette and bottles with freshwater, then lowered the rosette (with bottles open) to 5 m above the seabed, and finally closed the bottles remotely from the vessel. The 45 l of sampled water was transferred into four disposable, sterilized plastic bags of 11.25 l each, and filtration was performed on board in a laboratory dedicated to eDNA sample processing. To speed up filtration, we used two identical filtration devices, each composed of an Athena® peristaltic pump (Proactive Environmental Products LLC, Bradenton, Florida, USA; nominal flow of 1.0 l min⁻¹), a VigiDNA 0.20 μm filtration capsule (SPYGEN, Le Bourget du Lac, France) and disposable sterile tubing. Each filtration device filtered the water from two plastic bags (22.5 l), giving two replicates per sampling site. We followed a rigorous protocol to avoid contamination during fieldwork, using disposable gloves, single-use filtration equipment and new plastic bags for each water sample. At the end of each filtration, we drained the water remaining in the capsule, replaced it with 80 ml of CL1 conservation buffer and stored the samples at room temperature following the manufacturer's specifications (SPYGEN, Le Bourget du Lac, France). The eDNA capsules were processed at SPYGEN following the protocol proposed by Polanco-Fernández et al. (2020).
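The volumes quoted in the sampling protocol follow from simple arithmetic. The minimal sketch below recomputes them from the stated values (nine ∼5 l bottles, four bags, two bags per filtration device) and adds an idealized filtration time at the pump's nominal flow. That time is an assumption for illustration only: real flow usually drops as the capsule clogs, so it is a lower bound.

```python
# Values taken from the protocol text; BOTTLE_VOLUME_L is approximate ("~5 l").
BOTTLES = 9
BOTTLE_VOLUME_L = 5.0
BAGS = 4
BAGS_PER_DEVICE = 2
PUMP_FLOW_L_PER_MIN = 1.0  # nominal flow of the peristaltic pump


def sampling_budget():
    total = BOTTLES * BOTTLE_VOLUME_L          # water collected per site
    per_bag = total / BAGS                     # volume in each sterile bag
    per_replicate = per_bag * BAGS_PER_DEVICE  # volume filtered by one device
    minutes = per_replicate / PUMP_FLOW_L_PER_MIN  # ideal time at nominal flow (assumption)
    return total, per_bag, per_replicate, minutes


total, per_bag, per_replicate, minutes = sampling_budget()
print(f"total={total} l, bag={per_bag} l, replicate={per_replicate} l, ~{minutes} min")
```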
Half of the extracted DNA was processed by Sinsoma using newly developed ddPCR assays for European seabass (Dicentrarchus labrax), European hake (Merluccius merluccius) and blackspot seabream (Pagellus bogaraveo). The other half was analysed by metabarcoding with the teleo primer. The raw metabarcoding data set is available at https://www.doi.org/10.16904/envidat.442. Bottom trawling with a GOV trawl was carried out before or after water sampling. The catch was sorted by species, and catch numbers and weights were recorded. No blackspot seabream individuals were caught. Data content:
* ddPCR/: contains the ddPCR counts and DNA concentrations for each sample and species.
* SampleInfo/: contains the filter volume for each eDNA sample.
* StationInfo/: contains metadata related to the data collected in the field for each filter.
* Metabarcoding/: contains metabarcoding results for the teleo primer.
* Trawldata/: contains catch data in numbers and weight (kg).
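The ddPCR/ files report both droplet counts and DNA concentrations. As a hedged illustration of how counts usually map to a concentration in droplet digital PCR, the sketch below applies the standard Poisson correction. The function name and the 0.85 nl default droplet volume are assumptions (droplet volume depends on the instrument), and the concentrations in the dataset may include further factors, such as dilution or extraction volume, that are not modelled here.

```python
import math


def ddpcr_copies_per_ul(positive: int, total: int, droplet_volume_nl: float = 0.85) -> float:
    """Estimate target copies per µl of ddPCR reaction from droplet counts.

    Standard Poisson correction: lambda = -ln(1 - p), where p is the fraction
    of positive droplets and lambda the mean number of copies per droplet.
    droplet_volume_nl is an ASSUMED default; use the instrument's own value.
    """
    if total <= 0 or not 0 <= positive <= total:
        raise ValueError("expected 0 <= positive <= total and total > 0")
    if positive == total:
        raise ValueError("all droplets positive: concentration cannot be estimated")
    p = positive / total
    mean_copies_per_droplet = -math.log(1.0 - p)
    return mean_copies_per_droplet / (droplet_volume_nl * 1e-3)  # 1 nl = 1e-3 µl


# Hypothetical counts: 1,000 positive droplets out of 15,000 accepted droplets.
print(round(ddpcr_copies_per_ul(1000, 15000), 1))  # ≈ 81.2 copies per µl
```

The Poisson step matters because a positive droplet may hold more than one target copy; simply dividing positives by total volume would underestimate concentration as the positive fraction grows.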

-

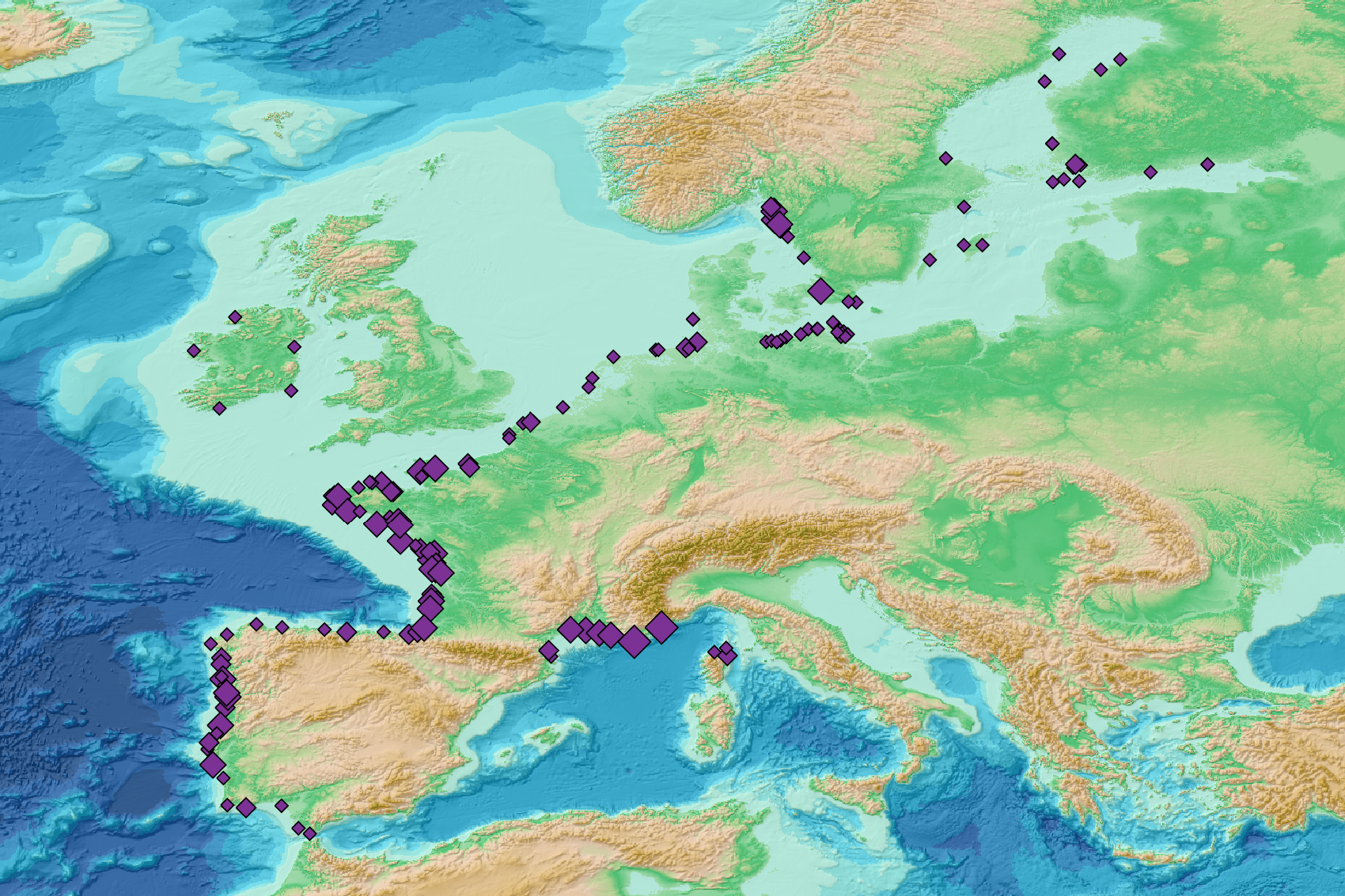

This visualization product displays the total abundance of marine macro-litter (> 2.5 cm) per beach per year from Marine Strategy Framework Directive (MSFD) monitoring surveys. EMODnet Chemistry included the collection of marine litter in its 3rd phase. Since the beginning of 2018, beach litter data have been gathered and processed in the EMODnet Chemistry Marine Litter Database (MLDB). Harmonizing all the data has been the most challenging task, given the heterogeneity of the data sources, sampling protocols and reference lists used on a European scale. The following preliminary processing steps were necessary to harmonize the data:
- Exclusion of the OSPAR 1000 protocol, following the OSPAR approach, which no longer includes these data in monitoring;
- Selection of MSFD surveys only (exclusion of other monitoring, cleaning and research operations);
- Exclusion of beaches without coordinates;
- Removal of some categories and litter types, such as organic litter, small fragments (paraffin and wax; items > 2.5 cm) and pollutants. The list of selected items is attached to this metadata. It was created using the EU Marine Beach Litter Baselines, the European Threshold Value for Macro Litter on Coastlines and the JRC Joint list of litter categories for marine macro-litter monitoring (these three documents are attached to this metadata);
- Normalization of survey lengths to 100 m and 1 survey per year: in some cases the survey length was not exactly 100 m, so, to compare litter abundance across beaches, a normalization is applied using this formula:
Number of items (normalized to 100 m) = Number of items of a given litter type × (100 / survey length in m)
This normalized number of items is then summed to obtain the total normalized number of litter items for each survey. Finally, the median abundance for each beach and year is calculated from these normalized abundances per survey.
Sometimes the survey length was null or equal to 0. Assuming that the MSFD protocol was applied, the length was set to 100 m in these cases. Percentiles 50, 75, 95 and 99 were calculated taking into account MSFD data for all years. More information is available in the attached documents. Warning: the absence of data on the map does not necessarily mean that no data exist, but that no information has been entered in the Marine Litter Database for this area.
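The normalization chain described above (per-type scaling to 100 m, sum per survey, median per beach and year, with null or zero lengths set to 100 m) can be sketched as follows. This is a minimal illustration, not the MLDB processing code: the input layout (one tuple per survey holding per-type counts) is hypothetical, and so is the choice to compute the percentiles over the beach-year medians using linear interpolation, since the exact percentile method is not stated here.

```python
import math
from collections import defaultdict
from statistics import median

DEFAULT_LENGTH_M = 100.0  # used when the survey length is null or 0 (MSFD assumption)


def normalized_survey_total(item_counts, survey_length_m):
    """Total number of litter items for one survey, normalized to 100 m of beach."""
    length = survey_length_m if survey_length_m else DEFAULT_LENGTH_M
    # Normalize each litter type, then sum over types.
    return sum(n * (100.0 / length) for n in item_counts)


def median_per_beach_year(surveys):
    """surveys: iterable of (beach_id, year, item_counts, survey_length_m)."""
    totals = defaultdict(list)
    for beach, year, counts, length in surveys:
        totals[(beach, year)].append(normalized_survey_total(counts, length))
    # One value per beach and year: the median of the normalized survey totals.
    return {key: median(values) for key, values in totals.items()}


def percentile(values, p):
    """Linear-interpolation percentile (same convention as NumPy's default)."""
    xs = sorted(values)
    if not xs:
        raise ValueError("empty input")
    rank = (p / 100.0) * (len(xs) - 1)
    lo, hi = math.floor(rank), math.ceil(rank)
    return xs[lo] + (xs[hi] - xs[lo]) * (rank - lo)


surveys = [
    ("B1", 2020, [10, 5], 50),   # 50 m survey: counts doubled
    ("B1", 2020, [3], 100),
    ("B1", 2020, [4, 4], None),  # missing length -> 100 m
    ("B2", 2020, [20], 200),     # 200 m survey: counts halved
]
medians = median_per_beach_year(surveys)
print(medians)  # {('B1', 2020): 8.0, ('B2', 2020): 10.0}
print([percentile(medians.values(), p) for p in (50, 75, 95, 99)])
```

Taking the median rather than the mean per beach and year keeps a single unusually dirty survey from dominating the yearly value.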

-

'''DEFINITION''' Estimates of Ocean Heat Content (OHC) are obtained by integrating, along a vertical profile in the ocean, the difference between the measured temperature and a climatology (von Schuckmann et al., 2018). The products used include three global reanalyses: GLORYS, C-GLORS and ORAS5 (GLOBAL_MULTIYEAR_PHY_ENS_001_031), and two in situ based reprocessed products: CORA5.2 (INSITU_GLO_PHY_TS_OA_MY_013_052) and ARMOR-3D (MULTIOBS_GLO_PHY_TSUV_3D_MYNRT_015_012). In addition, the time series based on the method of von Schuckmann and Le Traon (2011) has been added. The regional OHC values are then averaged over 60°S-60°N with two aims: i) to obtain the mean OHC, expressed in joules per square meter (J/m2), to monitor large-scale variability and change; ii) to monitor the amount of energy stored in the ocean in the form of heat (i.e. the change of OHC in time), expressed in watts per square meter (W/m2). Ocean heat content is one of the six Global Climate Indicators recommended by the World Meteorological Organization for Sustainable Development Goal 13 implementation (WMO, 2017). '''CONTEXT''' Knowing how much heat energy is stored and released in the ocean, and where, is essential for understanding the contemporary state, variability and change of the Earth system, as the ocean shapes our perspectives for the future (von Schuckmann et al., 2020). Variations in OHC can induce changes in ocean stratification, currents, sea ice and ice shelves (IPCC, 2019; 2021); they set time scales and dominate Earth system adjustments to climate variability and change (Hansen et al., 2011); they are a key player in ocean-atmosphere interactions and sea level change (WCRP, 2018); and they can impact marine ecosystems and human livelihoods (IPCC, 2019). '''CMEMS KEY FINDINGS''' Since 2005, the upper (0-700 m) near-global (60°S-60°N) ocean has warmed at a rate of 0.6 ± 0.1 W/m2. Note: the key findings will be updated annually in November, in line with OMI evolutions.
'''DOI (product):''' https://doi.org/10.48670/moi-00234

-

This visualization product displays the locations of nets where research and monitoring protocols have been applied to collate data on micro-litter. The mesh sizes used with these protocols are indicated by different colors on the map. EMODnet Chemistry included the collection of marine litter in its 3rd phase. Before 2021, there was no coordinated effort at the regional or European scale for micro-litter. Given this situation, EMODnet Chemistry proposed adopting the data gathering and data management approach generally applied to marine data, i.e., populating metadata and data in the CDI Data Discovery and Access service using dedicated SeaDataNet data transport formats. EMODnet Chemistry is currently the official EU collector of micro-litter data from Marine Strategy Framework Directive (MSFD) National Monitoring activities (descriptor 10). A series of specific standard vocabularies and standard terms related to micro-litter have been added to the SeaDataNet NVS (NERC Vocabulary Server) Common Vocabularies to describe micro-litter. European micro-litter data are collected by the National Oceanographic Data Centres (NODCs). Micro-litter map products are generated from NODC data after checks on the aggregated collection, including data and data format checks and data harmonization. A filter is applied to show only micro-litter sampled according to research and monitoring protocols, such as MSFD monitoring. Warning: the absence of data on the map does not necessarily mean that no data exist, but that no information has been entered in the National Oceanographic Data Centre (NODC) for this area.

-

Rocch, the French "mussel watch", provides regulatory data for shellfish area quality management. Once a year, molluscs (mainly mussels and oysters) are sampled at fixed periods (currently mid-February, with a tolerance of one tide before and after the target date) at 70 to 80 monitoring stations in bivalve mollusc production areas. At each monitoring station, molluscs are collected from wild beds or farming facilities, ensuring a minimum stay of 6 months on site before sampling. The individuals selected are adults of a single species and uniform size (30 to 60 mm long for mussels, 2 to 3 years old for oysters, and commercial size for other species). A minimum of 50 mussels (or other species of similar size) or 10 oysters is required to constitute a representative pooled sample. Lead, mercury, cadmium, PAHs, PCBs, dioxins and, since 2023, regulated PFASs are analysed in mollusc tissues.
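The pooled-sample rules above (single species, minimum number of individuals, mussel length range, minimum time on site) lend themselves to a simple validation step. The sketch below is hypothetical: the data model, the taxon labels "mussel" and "oyster" and the function name are illustrative assumptions, not part of the Rocch data. It does not check oyster age, the commercial size of other species or the sampling-date tolerance, because those need information this model does not carry.

```python
from dataclasses import dataclass

# Rules paraphrased from the sampling description; labels and model are hypothetical.
MIN_INDIVIDUALS = {"mussel": 50, "oyster": 10}
MUSSEL_LENGTH_MM = (30.0, 60.0)
MIN_MONTHS_ON_SITE = 6


@dataclass
class PooledSample:
    taxon: str               # "mussel" or "oyster"
    species: set[str]        # species names present in the pool
    lengths_mm: list[float]  # shell length of each individual
    months_on_site: float    # time spent at the station before sampling


def check_pooled_sample(s: PooledSample) -> list[str]:
    """Return the list of rule violations (empty if the pool looks acceptable)."""
    problems = []
    if len(s.species) != 1:
        problems.append("pool must contain a single species")
    minimum = MIN_INDIVIDUALS.get(s.taxon)
    if minimum is None:
        problems.append(f"no rule defined for taxon {s.taxon!r}")
    elif len(s.lengths_mm) < minimum:
        problems.append(f"only {len(s.lengths_mm)} individuals, need {minimum}")
    if s.taxon == "mussel":
        lo, hi = MUSSEL_LENGTH_MM
        if any(not lo <= x <= hi for x in s.lengths_mm):
            problems.append("mussel length outside 30-60 mm")
    if s.months_on_site < MIN_MONTHS_ON_SITE:
        problems.append("less than 6 months on site")
    return problems


pool = PooledSample("mussel", {"Mytilus edulis"}, [42.0] * 50, months_on_site=8)
print(check_pooled_sample(pool))  # []
```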

-

Rocch, the French "mussel watch", provides chemical data for marine quality management. Once a year, trace metals and organic compounds (chlorinated compounds, PAHs, brominated flame retardants, perfluorinated compounds, organotins, etc.) are analysed in mollusc tissues to check chemical quality according to the European Framework Directives and the Regional Seas Convention (OSPAR).

-

The database contains the field measurements collected during a one-week experiment in October 2021 on the Socoa rocky platform, France. The general objective of the study was to evaluate the momentum balance over the rocky platform, in particular to quantify the combined effect of high seabed roughness and waves on the coupling between circulation, wave forcing and mean water level. The analysis is performed along a single cross-shore transect. The data include:
- the cross-shore bathymetric profile;
- time series of integrated wave parameters, local mean water depth and wave-averaged currents over successive 30-min bursts;
- the depth- and wave-averaged momentum fluxes, following the formulation proposed by Smith (2006) and Bruneau et al. (2011). The fluxes are median values computed within bins of the ratio of significant wave height to water depth.
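The last item describes fluxes reported as medians within bins of the ratio of significant wave height to water depth (Hs/h). A minimal sketch of that binning step is shown below. The function name, argument names and bin edges are hypothetical; the actual bin edges and the water depth used in the dataset are not given here.

```python
from bisect import bisect_right
from statistics import median


def binned_median_flux(hs, depth, flux, edges):
    """Median flux within bins of Hs/h.

    edges: ascending bin edges; bins are half-open [lower, upper), and ratios
    outside [edges[0], edges[-1]) are dropped. Returns {(lower, upper): median}
    for non-empty bins only.
    """
    bins = {}
    for h_s, h, f in zip(hs, depth, flux):
        if h <= 0:
            continue  # skip dry or invalid bursts
        ratio = h_s / h
        i = bisect_right(edges, ratio) - 1
        if 0 <= i < len(edges) - 1:
            bins.setdefault((edges[i], edges[i + 1]), []).append(f)
    return {k: median(v) for k, v in sorted(bins.items())}


# Hypothetical bursts: Hs (m), local mean depth (m) and a flux value per burst.
print(binned_median_flux([0.2, 0.3, 0.5, 0.9], [1, 1, 1, 1], [1.0, 3.0, 5.0, 7.0], [0.0, 0.4, 0.8]))
# {(0.0, 0.4): 2.0, (0.4, 0.8): 5.0}
```

Using the median per bin, as the dataset description states, limits the influence of individual noisy 30-min bursts on each binned flux value.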

-

The PHYTOBS-Network dataset includes long-term time series on marine microphytoplankton since 1987 along the whole French metropolitan coast. Microphytoplankton data consist of microscopic taxonomic identifications and counts. The whole dataset is available and includes 25 sampling locations. PHYTOBS-Network studies microphytoplankton diversity in its hydrological context along French coasts under gradients of anthropogenic pressure. PHYTOBS-Network makes it possible to analyse the responses of phytoplankton communities to environmental changes, to assess the quality of the coastal environment through indicators, to define ecological niches, to detect variations in bloom phenology, and to support any scientific question by providing data. PHYTOBS-Network provides the scientific community and stakeholders with validated and qualified data in order to improve knowledge of the biomass, abundance and composition of marine microphytoplankton in coastal and lagoon waters in their hydrological context. PHYTOBS-Network originates from two networks: the historical REPHY (French Observation and Monitoring program for Phytoplankton and Hydrology in coastal waters), supported by Ifremer since 1984, and SOMLIT (Service d'observation en milieu littoral), supported by INSU-CNRS since 1995. Monitoring started in 1987 at some sites and later at others. Hydrological data are provided by the REPHY or SOMLIT network, depending on the site.

-

As part of the marine water quality monitoring of the “Pertuis” and the “baie de l’Aiguillon” (France), commissioned by the OFB and carried out by setec énergie environnement, three monitoring stations were installed. Two were set up at the mouths of the Charente and Seudre rivers on February 6 and 27, 2019, respectively, and a third was deployed in the Bay of Aiguillon on March 24, 2021. The dataset presented here concerns the station in the Bay of Aiguillon. Measurements are organized into .csv files, one per year. Data are collected with a WiMO multiparameter probe, which records the following parameters:
• Temperature (-2 to 35 °C)
• Conductivity (0 to 100 mS/cm)
• Pressure (0 to 30 m)
• Turbidity (0 to 4000 NTU)
• Dissolved Oxygen (0 to 23 mg/L & 0 to 250 %)
• Fluorescence (0 to 500 ppb)
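Assuming the ranges listed for each parameter are the probe's measuring ranges, a basic quality-control pass over the yearly .csv files could flag values that fall outside them. The sketch below is illustrative only: the column names, the delimiter default and the function names are hypothetical and must be matched to the actual file headers, and range checking is an assumed QC step rather than a documented part of this dataset's processing.

```python
import csv

# Ranges from the parameter list; the column names are hypothetical.
RANGES = {
    "temperature_c": (-2.0, 35.0),
    "conductivity_ms_cm": (0.0, 100.0),
    "pressure_m": (0.0, 30.0),
    "turbidity_ntu": (0.0, 4000.0),
    "oxygen_mg_l": (0.0, 23.0),
    "oxygen_sat_pct": (0.0, 250.0),
    "fluorescence_ppb": (0.0, 500.0),
}


def out_of_range(record):
    """Return the columns whose value lies outside the listed range."""
    flagged = []
    for column, (lo, hi) in RANGES.items():
        value = record.get(column)
        if value is None or value.strip() == "":
            continue  # missing value: nothing to check
        if not lo <= float(value) <= hi:
            flagged.append(column)
    return flagged


def flag_file(path, delimiter=","):
    """Yield (line_number, flagged_columns) for rows with out-of-range values.

    Line numbers assume one header line and no multi-line fields; the
    delimiter is an assumption (French CSV files often use ";").
    """
    with open(path, newline="", encoding="utf-8") as f:
        for line_no, row in enumerate(csv.DictReader(f, delimiter=delimiter), start=2):
            flagged = out_of_range(row)
            if flagged:
                yield line_no, flagged


print(out_of_range({"temperature_c": "36.2", "turbidity_ntu": "12"}))  # ['temperature_c']
```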

-

The flat oyster Ostrea edulis is a European native species that once covered vast areas in the North Sea, on the Atlantic coast and in other European coastal waters, including the Mediterranean region. All these populations have been heavily fished by dredging over the last three centuries. More recently, the emergence of parasites, combined with the proliferation of various predators and many additional human-induced stressors, has caused a dramatic decrease in the last remaining flat oyster populations. Today, this species has disappeared from many locations in Europe and is registered on the OSPAR (Oslo-Paris Convention for the Protection of the Marine Environment of the North-East Atlantic) list of threatened and/or declining species (see https://www.ospar.org/work-areas/bdc/species-habitats/list-of-threatened-declining-species-habitats). In that context, since 2018, the Flat Oyster REcoVERy project (FOREVER) has been promoting the re-establishment of native oysters in Brittany (France). This multi-partner project, involving the CRC (Comité Régional de la Conchyliculture), IFREMER (Institut Français de Recherche pour l’Exploitation de la Mer), ESITC (École Supérieure d’Ingénieurs des Travaux de la Construction) Caen and Cochet Environnement, has consisted of (1) inventorying and evaluating the status of the main wild flat oyster populations across Brittany, (2) analysing in detail the two largest oyster beds, in the bays of Brest and Quiberon, to improve understanding of flat oyster ecology and recruitment variability and to suggest possible ways of improving recruitment, and (3) proposing practical measures for the management of wild beds in partnership with members of the shellfish industry and marine managers. The final report of this project is available on Archimer: https://doi.org/10.13155/79506. This survey is part of Task 1 of the FOREVER project, which ran from 2017 to 2021.
Some earlier data, acquired with the same methodology and within the same geographic area, have also been added to this dataset. These data were collected during 30 intertidal and diving surveys in various bays and inlets of the coast of Brittany. The survey locations were chosen with the help of historical maps. In the field, the methodology was simple enough to be easily implemented regardless of the configuration of the sampled site. The intertidal survey was conducted at very low tide (tidal coefficient > 100) to sample the 0-1 m level. Sampling was carried out randomly or systematically along the low water line. Where possible (in terms of visibility and accessibility), dive surveys were also carried out (0-10 m depth) along 100 m transects, using the same methodology of counting in a 1 m2 quadrat. Whenever possible, georeferenced photographs were taken to show the appearance, density and habitat where Ostrea edulis was present. All these pictures are available in the image bank file. Overall, this dataset contains a total of 300 georeferenced records where flat oysters have been observed. The dataset file also contains information on the surrounding habitat and is organized according to the OSPAR recommendations. This publication also provides a map in KML format showing each occurrence and its characteristics. This work was done in the framework of the following research project: "Inventaire, diagnostic écologique et restauration des principaux bancs d’huitres plates en Bretagne : le projet FOREVER. Contrat FEAMP 17/2215675".
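Because counts are made in 1 m2 quadrats, each count is directly a density in individuals per m2. As a hedged illustration, the sketch below summarizes a set of quadrat counts from one site as a mean density with its standard error. The function name and this way of aggregating are assumptions for illustration; the source does not say how, or whether, the records in this dataset are aggregated.

```python
import math
from statistics import mean, stdev

QUADRAT_AREA_M2 = 1.0  # quadrat size used in the surveys


def density_per_m2(counts):
    """Mean density (individuals per m2) and its standard error from quadrat counts.

    The standard error is NaN when only one quadrat is available.
    """
    if not counts:
        raise ValueError("no quadrats")
    densities = [c / QUADRAT_AREA_M2 for c in counts]
    m = mean(densities)
    se = stdev(densities) / math.sqrt(len(densities)) if len(densities) > 1 else float("nan")
    return m, se


# Hypothetical counts of flat oysters in three quadrats along a transect.
print(density_per_m2([0, 2, 4]))
```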

Catalogue PIGMA
